Test & Refine Before Going Live

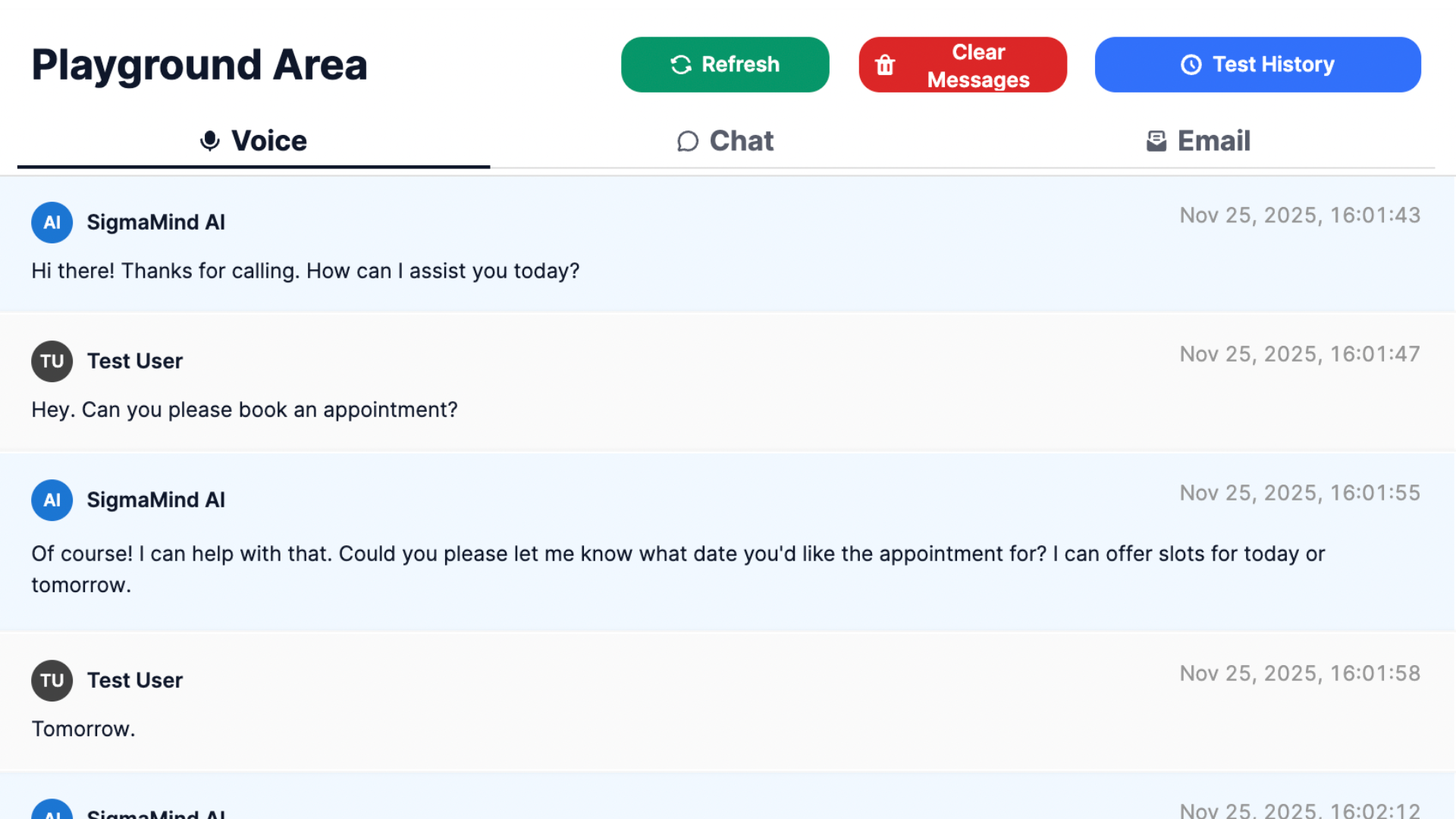

Preview, debug, and perfect every conversational experience before your agent goes live. The Playground gives your team a real-time, channel-specific space to validate responses, flows, and integrations.

Why Use the SigmaMind Playground?

Smarter Testing, Faster Launches

Advanced Testing Features in SigmaMind AI Playground

Frequently Asked Questions (FAQs)

What is the Playground in SigmaMind AI, and what can I do with it?

The Playground is an interactive environment where developers can simulate and test conversations across voice, chat, and email before deploying their agents live. It lets you step into the shoes of an end user to see exactly how the AI agent responds across channels. It’s designed to catch bugs, fine-tune behavior, and validate flows under real-world conditions.

Can I test my agents in voice, chat, and email formats?

Yes. The Playground supports chat, voice, and email, and you can switch between modes with a single click. This lets you confirm that your conversational design and tone stay consistent across channels and gives you full visibility into how your agent handles each one.

Can I inspect which AI agent is triggered during a test?

Yes. During any test run, the Playground displays which AI agent or subflow is currently active, so you know exactly which logic is executing. If you’re chaining multiple agents or using fallback flows, this visibility helps ensure each one behaves as expected.

How are variables managed and displayed during testing?

All runtime variables, such as user name, issue type, session time, intent labels, and API payloads, are visible in a structured panel. You can track how values are updated as the conversation progresses, which is critical for verifying that branching, conditions, and API calls work as intended.
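To make the idea of tracking variable updates concrete, here is a minimal Python sketch that compares two snapshots of runtime variables and reports what changed between turns. It is not part of SigmaMind AI: the function name `diff_variables` and the sample variable names are hypothetical, and the snapshots are assumed to be plain dictionaries you have noted down yourself during a test run.

```python
from typing import Any

# Sentinel marking "variable not present in this snapshot" (hypothetical helper).
_ABSENT = object()


def diff_variables(before: dict[str, Any], after: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return {name: (old, new)} for each variable added, removed, or changed.

    A variable missing from one snapshot is reported with None on that side.
    """
    changes: dict[str, tuple[Any, Any]] = {}
    for name in before.keys() | after.keys():
        old = before.get(name, _ABSENT)
        new = after.get(name, _ABSENT)
        if old != new:
            changes[name] = (
                None if old is _ABSENT else old,
                None if new is _ABSENT else new,
            )
    return changes


# Illustrative snapshots taken after two consecutive turns of a test conversation.
turn_1 = {"user_name": "Ana", "issue_type": None}
turn_2 = {"user_name": "Ana", "issue_type": "billing", "intent": "refund_request"}

for name, (old, new) in sorted(diff_variables(turn_1, turn_2).items()):
    print(f"{name}: {old!r} -> {new!r}")
```

A diff like this makes it easier to spot a branch condition that fired without the variable it depends on ever being set.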

Can I test new agents without affecting the live environment?

Absolutely. The Playground operates in a safe, isolated test environment. None of the changes or test runs here affect live users. You can safely try new logic, simulate edge cases, and experiment freely before publishing.

Is it possible to simulate real-time user sessions in voice and chat?

Yes. In voice mode, the Playground simulates TTS-based calls with live transcription. In chat mode, you can type user inputs as if you're a real customer. This makes it easy to test how the AI agent responds to varied user behavior in real time.

How do I know when my AI agent is ready to go live?

Your AI agent is ready once your test cases pass, variables behave as expected, and all branches are verified across voice, chat, and email. The Playground acts as the final checkpoint before deployment, helping ensure quality, reliability, and omnichannel consistency.
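The readiness criteria above can be written down as a simple checklist. The following Python sketch is one way a team might record test outcomes and list what is still missing. It is an illustration only, not a SigmaMind AI feature: `CaseResult`, `readiness_gaps`, and the branch names are all hypothetical.

```python
from dataclasses import dataclass

# The three channels named in this FAQ.
CHANNELS = ("voice", "chat", "email")


@dataclass
class CaseResult:
    """Outcome of one manually recorded test case (hypothetical structure)."""
    name: str
    channel: str
    branch: str
    passed: bool


def readiness_gaps(results: list[CaseResult], required_branches: set[str]) -> list[str]:
    """Return the reasons the agent is not ready; an empty list means ready."""
    # Any failing test case blocks the launch.
    gaps = [f"failed: {r.name} ({r.channel})" for r in results if not r.passed]
    # Every required branch must have a passing test on every channel.
    covered = {(r.channel, r.branch) for r in results if r.passed}
    for channel in CHANNELS:
        for branch in sorted(required_branches):
            if (channel, branch) not in covered:
                gaps.append(f"unverified: {branch} on {channel}")
    return gaps


# Example: the "greeting" branch passes on voice and chat but was never run on email.
results = [
    CaseResult("greet-voice", "voice", "greeting", True),
    CaseResult("greet-chat", "chat", "greeting", True),
]
print(readiness_gaps(results, {"greeting"}))
```

Returning a list of gaps rather than a single yes/no makes it clear which channel or branch still needs a test run before publishing.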